NestCam unlimited storage.

Introduction.

In this post I will describe how to build cheap, practically unlimited video storage for NestCam (a.k.a. DropCam). I will use the built-in functionality that streams video from NestCam to the nest.com web page. The web page also makes it possible to expose the stream endpoint, and that is what I will use: I will set up a simple AWS infrastructure with one EC2 instance and docker-engine to capture the stream and store it in AWS.S3 storage for later viewing.

Table of Contents.

- Disclaimer.

- Find stream endpoint.

- Automate recording with Docker.

- Store video on S3.

- Future improvements.

- Conclusion.

- P.S.

Disclaimer.

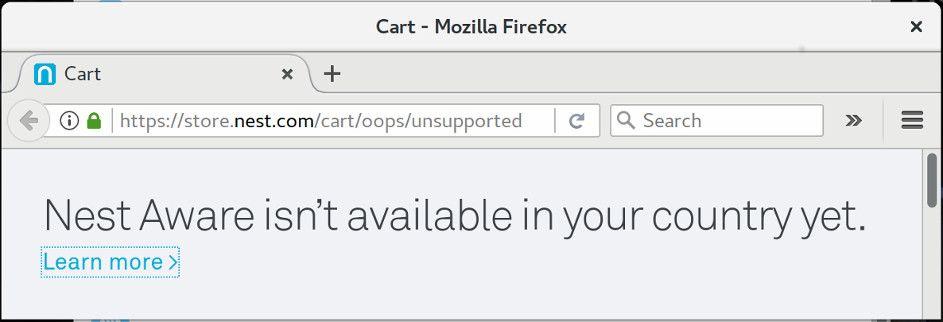

In my opinion NestCam is a premium product, probably the best in its segment. If you can afford it, I encourage you to buy the subscription (Nest Aware) and use it as it is. The problem for me is that the subscription is not available in the country I live in, Latvia, and IMO 100$/year is too much for me. I'm ready to sacrifice some features, but I need a long retention period for video history (without a Nest Aware subscription you get 4h of history).

Find stream endpoint.

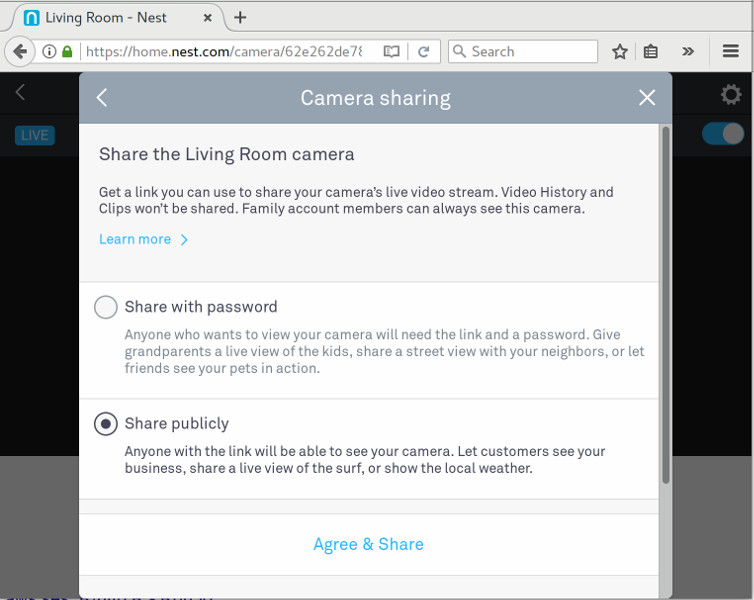

The first thing we need is to expose the video stream endpoint. As I mentioned, NestCam by default sends all video footage to the home.nest.com page. We are going to enable access to the stream endpoint on that page.

On the home.nest.com page, in the NestCam settings, find Camera sharing and enable Share publicly. Save the URL generated by the page (URL example: https://video.nest.com/live/GABCSDSDRdm).

Now if you open this URL you will see the live stream from your NestCam displayed in the web page using an SWF player. We need to find the URL of the stream endpoint (something we can reuse with other players/tools). To do this, open Developer Tools in the browser you are using and search for a GET request to https://video.nest.com/api/dropcam/cameras.get_by_public_token. In response the server sends a JSON document:

{

"status": 0,

"items": [

{

"id": 12342343247,

"live_stream_host": "stream-ire-alfa.dropcam.com:443",

"description": "",

"hours_of_free_tier_history": 3,

"uuid": "62***********************de"

". . ."

}

]

}

We are looking for the uuid field of the entry in items, here 62***********************de.
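
If you save the response body to a file, the two fields we care about can be pulled out on the command line. The following is a minimal sketch: the function name json_string_field and the file name response.json are my own, and the sed expression is a plain-text match, not a real JSON parser, so it assumes each field appears once.

```shell
# Print the value of a string field from a saved cameras.get_by_public_token
# response. Plain-text matching only: assumes the field occurs once in the file.
json_string_field() (
  field="$1"
  file="$2"
  sed -n "s/.*\"${field}\"[[:space:]]*:[[:space:]]*\"\([^\"]*\)\".*/\1/p" "$file" | head -n 1
)

# Usage (response.json is a hypothetical file holding the saved response):
#   uuid=$(json_string_field uuid response.json)
#   host=$(json_string_field live_stream_host response.json)
```

If jq is installed, `jq -r '.items[0].uuid' response.json` is the more robust choice.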

Using this uuid you can construct the stream endpoint URL(s).

For example, over Real Time Messaging Protocol: rtmps://stream-ire-alfa.dropcam.com/nexus/62**********de, or as an HTTP Live Streaming playlist: https://stream-ire-alfa.dropcam.com/nexus_aac/62**********de/playlist.m3u8

You can construct the direct URL and open it in VLC player: Media → Open Network Stream (Ctrl+N). If you see the live stream from your camera, proceed with the next step.
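
The URL construction can be written down as a small helper. This is a sketch based only on the example URLs in this post: the function name stream_urls is my own, and dropping the ":443" port from live_stream_host is an assumption taken from those examples.

```shell
# Print the RTMPS and HLS playlist URLs for a camera.
# Assumption: the ":443" suffix of live_stream_host is dropped, as in the
# example URLs shown in this post.
stream_urls() (
  host="${1%%:*}"   # strip an optional ":port" suffix
  uuid="$2"
  echo "rtmps://${host}/nexus/${uuid}"
  echo "https://${host}/nexus_aac/${uuid}/playlist.m3u8"
)

# Usage:
#   stream_urls "stream-ire-alfa.dropcam.com:443" "<your uuid>"
```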

Automate recording with Docker.

Because VLC is not provided by default on all platforms and has multiple dependencies, we are going to install VLC in docker. As the base image I will use centos:7, and VLC itself will be provided by Nux Dextop (a third-party RPM repository).

We will also run VLC under the vlc user (non-root), because under root we get this error:

VLC is not supposed to be run as root. Sorry. If you need to use real-time priorities and/or privileged TCP ports you can use /usr/bin/vlc-wrapper (make sure it is Set-UID root and cannot be run by non-trusted users first).

FROM centos:7

RUN rpm -Uvh http://li.nux.ro/download/nux/dextop/el7/x86_64/nux-dextop-release-0-1.el7.nux.noarch.rpm

RUN yum -y update && yum -y install vlc

RUN adduser vlc

USER vlc

WORKDIR /home/vlc

CMD /usr/bin/vlc -v -I dummy --run-time=60 \

--sout "#file{mux=mp4,dst=out.mp4,access=file}" \

${STREAM_URL} vlc://quit

Now if we build the image and run the container with STREAM_URL provided and a shared volume mounted, we will find a ~60 sec. video recorded from our NestCam in the shared directory.

user$ docker build -t ogavrisevs/nestcam-min . -f Dockerfile-min

user$ docker push ogavrisevs/nestcam-min

user$ mkdir out && chown 1000:1000 out

user$ docker run -it \

--user vlc \

-v $(pwd)/out:/home/vlc \

-e "STREAM_URL=https://stream-ire-alfa.dropcam.com/nexus_aac/62****************de/playlist.m3u8" \

ogavrisevs/nestcam-min

Docker output:

VLC media player 2.2.4 Weatherwax (revision 2.2.3-37-g888b7e89)

[0000000000be1c18] pulse audio output error: PulseAudio server connection failure: Connection refused

[0000000000bef458] core interface error: no suitable interface module

[0000000000af5148] core libvlc error: interface "globalhotkeys,none" initialization failed

[0000000000bef458] dbus interface error: Failed to connect to the D-Bus session daemon: Unable to autolaunch a dbus-daemon without a $DISPLAY for X11

[0000000000bef458] core interface error: no suitable interface module

[0000000000af5148] core libvlc error: interface "dbus,none" initialization failed

[0000000000bef458] dummy interface: using the dummy interface module...

[00007f773452c6c8] httplive stream: HTTP Live Streaming (stream-ire-alfa.dropcam.com/nexus_aac/62******************************de/playlist.m3u8)

[00007f7734425648] ts demux warning: first packet for pid=256 cc=0xf

[00007f773452c6c8] httplive stream warning: playback in danger of stalling

You can open the out.mp4 file to make sure the footage is stored.

Store video on S3.

The next thing we need is a place to run our container and storage for the video. I'm going to set up one AWS.EC2 instance, and as storage I will use AWS.S3 because it's cheap and durable, with a simple API we can hook into later. As an alternative you can choose any other container cluster/hosting (here is an example of running our container in Kubernetes: K8S-job-service).

I'm going to use the Amazon Linux AMI optimized for AWS.ECS (read: preinstalled with docker 1.12 as of this date). If you don't want to spin up the instance in Frankfurt (closest to me), check the documentation at http://docs.aws.amazon.com/AmazonECS and choose another region and AMI id.

Let's create a security group first:

user$ aws --profile=ec2-user --region eu-central-1 \

ec2 create-security-group \

--group-name ssh \

--description "Allow all incoming ssh"

{

"GroupId": "sg-38a61553"

}

And allow ssh for this SG:

user$ aws --profile=ec2-user --region eu-central-1 \

ec2 authorize-security-group-ingress \

--group-name ssh --protocol tcp --port 22 --cidr 0.0.0.0/0

Now let's spin up the instance:

user$ aws --profile=ec2-user --region eu-central-1 \

ec2 run-instances \

--image-id ami-085e8a67 --count 1 --instance-type t2.micro \

--key-name oskarsg --security-group-ids sg-38a61553

As output of the command we will get the InstanceId:

{

"OwnerId": "716976692685",

"ReservationId": "r-08c941c138b9e3a71",

"Groups": [],

"Instances": [

{

"Monitoring": {

"State": "disabled"

},

". . . .":,

"InstanceId": "i-0b0d076b39211d3b6",

"ImageId": "ami-085e8a67"

}

]

}

And with the InstanceId we can get the public DNS name:

user$ aws --profile=ec2-user --region eu-central-1 \

ec2 describe-instances \

--instance-ids i-0b0d076b39211d3b6 | grep PublicDnsName | head -n 1

"PublicDnsName": "ec2-52-28-83-190.eu-central-1.compute.amazonaws.com"
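
As an alternative to grep, the AWS CLI can filter the response itself with its --query option (a JMESPath expression) and print plain text with --output text. A minimal sketch, with the function name instance_public_dns being my own:

```shell
# Print only the public DNS name of one instance.
# Arguments: AWS CLI profile, region, instance id.
instance_public_dns() {
  aws --profile="$1" --region "$2" \
    ec2 describe-instances --instance-ids "$3" \
    --query 'Reservations[0].Instances[0].PublicDnsName' --output text
}

# Usage:
#   instance_public_dns ec2-user eu-central-1 i-0b0d076b39211d3b6
```

This avoids depending on the line layout of the JSON output.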

Now if we run the container, the video file will be saved on the host. What we want is to send the file to an AWS.S3 bucket. Let's create the bucket:

user$ aws --profile=s3-user --region=eu-central-1 \

s3api create-bucket --bucket sec-cam \

--create-bucket-configuration LocationConstraint=eu-central-1

{

"Location": "http://sec-cam.s3.amazonaws.com/"

}

And let's update our Dockerfile with the awscli tools to copy the file from the container to the AWS.S3 bucket. Of course we are not going to store the stream URL inside the image; we will pass it as an ENV variable. We will also pass the AWS access key id and secret key as ENV variables so the container can write to the bucket. This can be simplified by setting up an IAM role for the EC2 instance, but for now we will specify the credentials explicitly.

FROM centos:7

RUN rpm -Uvh http://li.nux.ro/download/nux/dextop/el7/x86_64/nux-dextop-release-0-1.el7.nux.noarch.rpm

RUN yum -y install epel-release

RUN yum -y update && yum -y install vlc awscli

RUN easy_install six

RUN adduser vlc

COPY run.sh /home/vlc/run.sh

RUN chown vlc:vlc /home/vlc/run.sh

RUN chmod 760 /home/vlc/run.sh

USER vlc

WORKDIR /home/vlc

CMD cd && ./run.sh

And the run.sh script that is copied into the image:

#!/bin/bash

# debug me

#set -x

RUN_TIME="${RUN_TIME:-60}"

BUCKET_SUB_FOLDER="${BUCKET_SUB_FOLDER:-device-id}"

export AWS_DEFAULT_REGION="${AWS_DEFAULT_REGION:-eu-central-1}"

export S3_USE_SIGV4="True"

OUTPUT_DIR="${OUTPUT_DIR:-/home/vlc/to_cloud}"

mkdir -p $OUTPUT_DIR

DATE=`date +%Y_%m_%d`

TIME=`date +%H_%M_%S`

FILE="${DATE}_${TIME}.mp4"

/usr/bin/vlc -v -I dummy --run-time=$RUN_TIME \

--sout "#file{mux=mp4,dst=${OUTPUT_DIR}/${FILE},access=file}" \

$STREAM_URL vlc://quit

# Check file exists

if [ ! -f "${OUTPUT_DIR}/${FILE}" ]; then

echo "File not found! :: ${OUTPUT_DIR}/${FILE} "

exit 1

fi

# Check file size

minimumsize=500000

actualsize=$(wc -c <"${OUTPUT_DIR}/${FILE}")

if [ $actualsize -ge $minimumsize ]; then

aws s3 cp $OUTPUT_DIR/$FILE s3://sec-cam/$BUCKET_SUB_FOLDER/$DATE/$FILE

else

echo "file size is less than $minimumsize bytes"

exit 1

fi
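
The script above assumes that STREAM_URL and the AWS credentials are present in the environment. A small guard at the top of run.sh can fail fast with a clear message instead of a VLC or awscli error later. This is a sketch, and the function name require_env is my own:

```shell
# Return non-zero and name the first variable that is unset or empty.
require_env() {
  for _name in "$@"; do
    # Indirect lookup of the variable named in $_name (POSIX-compatible).
    eval "_value=\${$_name:-}"
    if [ -z "$_value" ]; then
      echo "Missing required environment variable: $_name" >&2
      return 1
    fi
  done
}

# Usage near the top of run.sh:
#   require_env STREAM_URL AWS_ACCESS_KEY_ID AWS_SECRET_ACCESS_KEY || exit 1
```

If you switch to an IAM role for the EC2 instance, check only STREAM_URL, since the AWS_* variables would no longer be set.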

After building our newest image we are ready to launch the container:

user$ docker build -t ogavrisevs/nestcam .

user$ docker push ogavrisevs/nestcam

user$ docker run -d --restart=always \

-e "AWS_ACCESS_KEY_ID=AK************8OQ" \

-e "AWS_SECRET_ACCESS_KEY=V9**********************0m" \

-e "STREAM_URL=https://stream-ire-alfa.dropcam.com/nexus_aac/62***********************de/playlist.m3u8" \

ogavrisevs/nestcam

Notice that we use -d and --restart=always: this way the container runs without a tty attached, and docker-engine restarts it each time it stops (once every 60 sec.).

Future improvements.

You will notice that sometimes, when there is no motion, the stream on the home.nest.com endpoint is closed. This results not only in an error in the container but also in increasing delays between container restarts, which can lead to missing video footage because recording is not running. A better solution could be to listen on the NestCam Event API and start recording only when we are sure the stream is open. If we record only while the stream is open, we can record much longer footage, until the event is over. A nice thing to have would be sending video to AWS.S3 in parallel with recording. This approach would make it possible to start viewing an event on the user's device while the event on NestCam is still happening.
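
Until recording is driven by events, a simpler stopgap is to poll the playlist URL and start VLC only once it answers. Note that this is polling, not the Event API approach described above. The sketch below rests on an untested assumption: that an open stream answers a plain GET on the playlist URL with a success status. The function names probe_stream and wait_for_stream are my own.

```shell
# Assumption: an open stream answers a GET on the playlist URL with a success
# status. curl -f turns HTTP error statuses into a non-zero exit code.
probe_stream() {
  curl -fsS --max-time 10 -o /dev/null "$1"
}

# Poll until the stream answers, or give up after max_attempts tries.
# Arguments: URL, max attempts (default 10), delay in seconds (default 5).
wait_for_stream() (
  url="$1"
  max_attempts="${2:-10}"
  delay="${3:-5}"
  attempt=1
  while [ "$attempt" -le "$max_attempts" ]; do
    if probe_stream "$url" 2>/dev/null; then
      exit 0
    fi
    sleep "$delay"
    attempt=$((attempt + 1))
  done
  exit 1
)

# Usage in run.sh, before the vlc call:
#   wait_for_stream "$STREAM_URL" 10 5 || exit 1
```

Waiting inside the container keeps it running while the stream is closed, so docker-engine has fewer failed starts to back off from.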

Conclusion.

As we can see from this blog post, it's possible to store video footage with an unlimited retention period (~0.023 usd / 1 GB / month). Despite the fact that resource requirements for the VLC process are very small (~80 MB of RAM, ~1/8 of one CPU unit, ~20 kbit/s of network), we still need to power up docker-engine, and a t2.nano (1x vCPU, 0.5 GiB) is not enough, so we need a bigger instance, t2.micro (1x vCPU, 1 GiB). A bigger instance means a bigger bill at the end of the month (10.25 usd/month). A solution can be to create a cluster where we can run multiple containers (probably hundreds) across multiple instances (read: Apache Mesos, Kubernetes, Docker Swarm).
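
To get a feeling for the storage part of the bill, the figures above can be plugged into a short calculation. This is only a rough sketch: it takes the ~20 kbit/s and ~0.023 usd/GB/month numbers from this post at face value, assumes continuous recording over a 30-day month, and uses decimal gigabytes. The function name estimate_month is my own, and your camera's real bitrate may be quite different.

```shell
# Estimate the GB recorded in a 30-day month and what that footage costs to
# keep in storage per month.
# Arguments: stream bitrate in kbit/s, storage price in USD per GB per month.
estimate_month() {
  awk -v kbps="$1" -v usd_per_gb="$2" 'BEGIN {
    gb = kbps * 86400 * 30 / 8 / 1000000   # kbit/s -> GB per 30 days
    printf "%.2f GB, %.2f USD/month\n", gb, gb * usd_per_gb
  }'
}

# Usage:
#   estimate_month 20 0.023
```

Because nothing is ever deleted, each month of footage adds its own share to the bill: after N months the storage cost is roughly N times the monthly figure.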

P.S.

If you think it's too much hassle to set up stream recording and you still want it to be stupid cheap, ping me, because I'm considering building a service where everything is already set up out of the box. All you need to do is give me the stream URL and I will provide:

- Web interface where you can view footage.

- Automatic archiving of very old footage (not deleting it, but sending it to AWS.Glacier).

- Encryption of video files on AWS.S3 (some auto-generated GPG key?) so that only the client can access the files.

- Recording only when the stream endpoint is open (responding to API.Events).